Overview |


Test Methods |

Basic Concepts |

For a more detailed discussion of the structure of "Test Methods" and "Tests", see Adding and Maintaining Tests. This discussion focuses specifically on creating and maintaining "Test Methods".

A "Test Method" is a "Workitem" SDI. Accordingly, all Test Method functionality and structure is based on the Workitem SDC. LabVantage maintains "Test Methods" and "Tests" internally as "Workitems" and "SDIWorkitems", respectively. Some areas of the user interface use these terms interchangeably.

These definitions apply:

| • | A "Test Method" is a container that can host Parameter Lists, Specifications, and other Test Methods. When you add an instance of a Test Method (which is a "Test") to a Sample, you are adding these items to the Sample. For example, if a Test Method contains two Parameter Lists and a Specification, both Parameter Lists and the Specification will be added to the SDI as a "Test". Tests are maintained in the SDIWorkitem table. The information concerning the Parameter Lists and Specification is maintained in the "SDIWorkitemItem" table. |

| • | A "Standard Test Method" can host Parameter Lists and Specifications. |

| • | A "Test Method Group" can host Parameter Lists, Specifications, and other "Standard Test Methods". |

After creating a Test Method, you can add an instance of it ("Test") to Samples as described in Adding and Maintaining Tests.

Test Method Form |

|

|

Example |

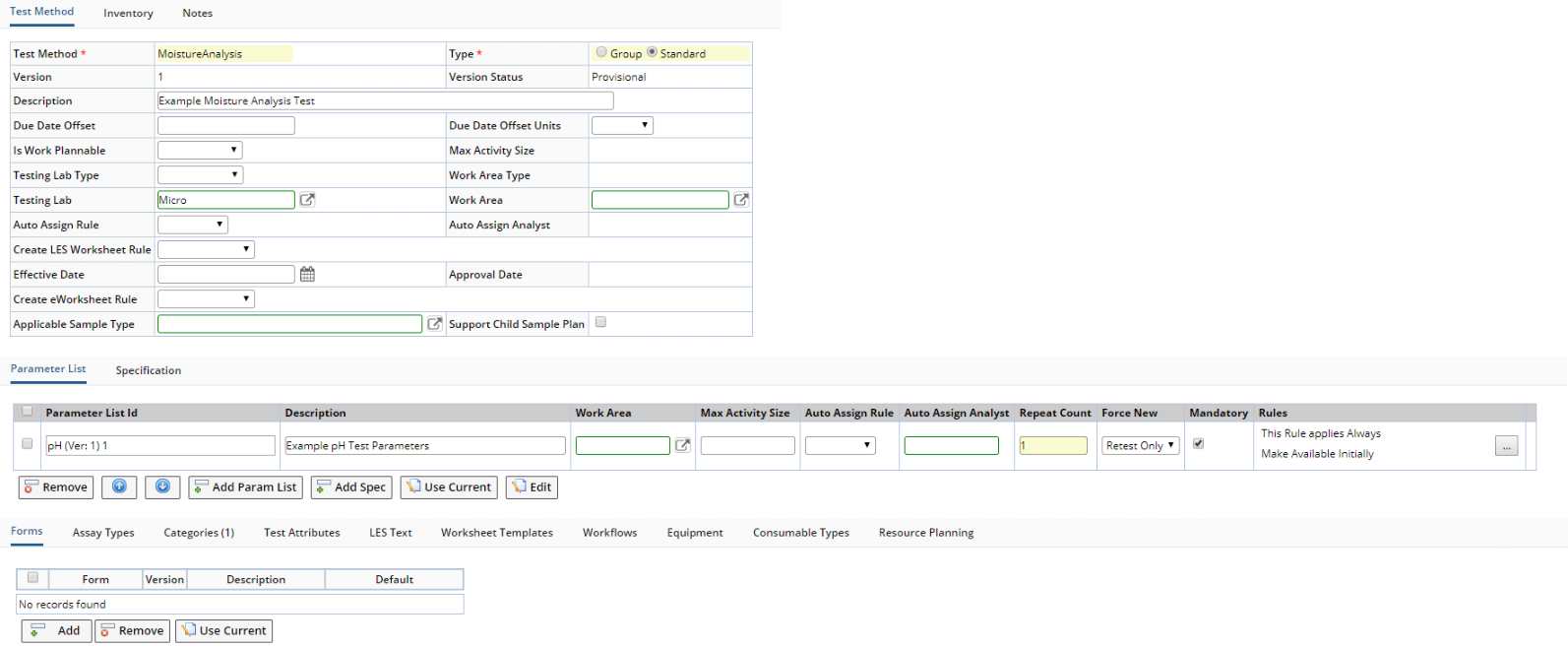

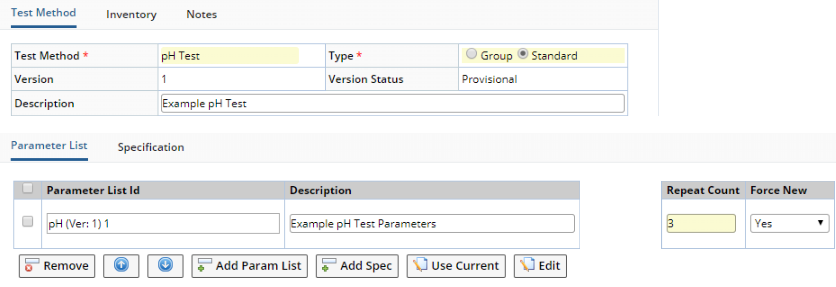

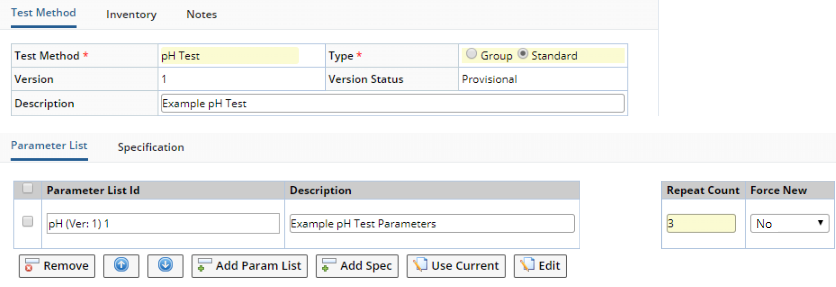

The example below shows the pH Test Method provided in the OOB configuration.

|

Test Method |

Fields of the Test Method form:

| Field | Description | |||

| Test Method | Identifier of the Test Method (preconfigured Workitem). | |||

| Type | Indicates whether this is a Standard Workitem or a Workitem Group. | |||

| Version, Version Status | Version and Version Status (see Concepts of Versioning). | |||

| Description | Text description of the Test Method. | |||

| Due Date Offset and Due Date Offset Units | Integer offset (and time units) applied to the Due Date. These values are copied to a Stability Study when this Test Method is specified. See Adding Working Set of Test Methods for more information. | |||

| Is Work Plannable | Used with the WAP module. See Introduction to Work Assignment and Planning (WAP). | |||

| Max Activity Size | Used with the WAP module. See Max Activity Size. This is available when "Is Work Plannable" is "By WorkItem" or "By DataSet". | |||

| Testing Lab Type | See Department Maintenance Page → Department Form → Hierarchy. If you choose a Testing Lab Type, you cannot choose a Testing Lab or Work Area, but you can choose a Work Area Type. | |||

| Work Area Type | See Department Maintenance Page → Department Form → Hierarchy. This is available only if you choose a Testing Lab Type. | |||

| Testing Lab | Testing Lab associated with this Test Method (see Departments). You can choose only a Testing Lab, or a Testing Lab and a Work Area in that Testing Lab. If you choose a Work Area, "Testing Lab" is automatically populated with the Parent Testing Lab for the Work Area. | |||

| Work Area | Work Area associated with this Test Method (see Departments). If you specify a Work Area here, all Parameter Lists in this Test Method default to this Work Area, and the Work Area field is disabled in the Parameter Lists detail. If you do not specify a Work Area here, you can choose a Work Area for each Parameter List in the Parameter Lists detail. If you choose a Testing Lab, the Work Area lookup shows only Work Areas in that Testing Lab. If you choose a Work Area, the "Testing Lab" is automatically populated with the Parent Testing Lab for the Work Area. | |||

| Auto Assign Rule | Automatically assigns the Test Method to an Analyst or Work Area. For a summary of Work Area assignment functionality, see Assigning Analysts, Work Areas, and Instruments to SDIs. | |||

| Auto Assign Analyst | Analyst automatically assigned to the Test Method if "Auto Assign Rule" is "Analyst". For a summary of Work Area assignment functionality, see Assigning Analysts, Work Areas, and Instruments to SDIs. | |||

| Create LES Worksheet Rule | Determines how the LES Worksheet is generated. See LES Worksheet Generation. | |||

| Effective Date, Approval Date | See Concepts of SDI Versioning and Approval. | |||

| Create eWorksheet Rule | Determines how an eForms Worksheet is created (see Workitem in Creating Worksheets). | |||

| Applicable Sample Type | Sample Type to which this Test Method can be applied. | |||

| Support Child Sample Plan | Allows a Child Sample Plan to be included for this Test Method and opens a Child Sample Plan detail after a Save. See BioBanking Master Data Setup → Services for more information. | |||

| Create Child Samples When Applied | If "Support Child Sample Plan" is checked, this option creates Samples when the Test Method is Applied. See BioBanking Master Data Setup → Services for more information. | |||

| Preferred Data Entry View | Determines the Data Entry Grid to use during Data Entry. | |||

Inventory |

Fields of the Inventory detail:

| Field | Description |

| Quantity per Repeat | Number of Test Methods per Repeat. |

| Quantity Units | Units for the Quantity per Repeat. |

| Separate Containers per Test | Check if separate Containers will be used for each Test Method. |

| Separate Containers per Repeat | Check if separate Containers will be used for each Repeat. |

| Default # of Repeats | Number of Repeats if none are specified. |

| Destructive Test | Check if the Sample is destroyed during the Test. |

| Container Reuse Test | Check if the Container will be reused. |

These values are used by Stability Studies when this Test Method is specified. See Adding Working Set of Test Methods for more information.

Test Method Details |

|

|

Description |

The Parameter Lists, Specifications, and (if a Group) Test Methods you add here will be associated with a Sample when you add an instance of this Test Method to the Sample.

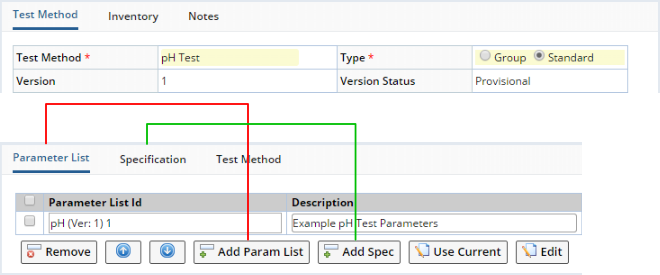

When the "Standard" Type is selected, the Test Method is a Standard Test Method. Accordingly, these detail elements let you add and remove Parameter Lists and Specifications.

|

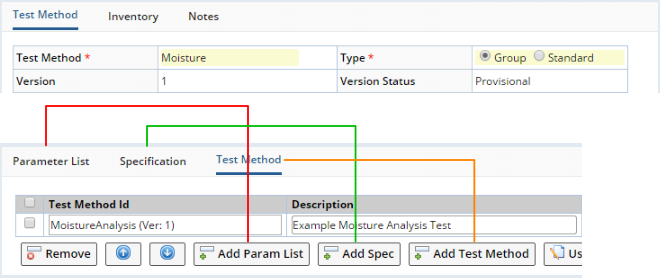

When the "Group" Type is selected, the Test Method is a Test Method Group. In addition to Parameter Lists and Specifications, you can also add other Test Methods. Accordingly, an "Add Test Method" button is added.

|

The "Use Current" button uses the current Version of the selected item, which is then identified as (Ver: C) as shown above. When you add a "Current" Versioned SDI to a Test Method, editing the Current Version presents it as read-only. See Concepts of SDI Versioning and Approval for more information.

When the "Group" Type is selected, the resulting Workitem Group contains other Workitems that are "children" of the "parent" Workitem Group. These are identified in the "Tests" detail of the Sample Maintenance pages (see Adding and Maintaining Tests).

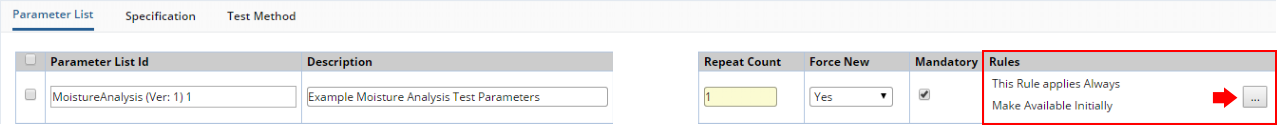

The "Parameter Lists", "Specifications", and "Test Methods" details provide these settings:

| Field | Description |

| Id | Identifier of the item. |

| Description | Description of item. |

| Mandatory | Indicates that the Status of the item must be "Completed" in order for the Workitem to be considered "Completed". For example, a "Mandatory" Parameter List means that the Status of the instance of the Parameter List (Data Set) must be "Completed" in order for the Test Status to be "Completed". The mechanics of this process are covered in Adding and Maintaining Tests. |

| Force New, Repeat Count | See Force New and Repeat Count. These are provided only in the "Parameter List" detail. |

| Rules | See Rules. This is provided in the "Parameter List" and "Test Method" details. |

| Max Activity Size | This is provided in the "Parameter List" detail. This overrides the respective value specified for the entire Test Method, thus allowing each Parameter List to have its own Max Activity Size. |

| Auto Assign Rule, Work Area, Auto Assign Analyst | These are provided in the "Parameter List" detail. These override the respective values specified for the entire Test Method, thus allowing each Parameter List to have its own automatic assignment rules. |

Parameter Lists Defining Assignment Rules and Work Areas |

| NOTE: | The behavior described in this section applies to LabVantage 8.4.1 and later LabVantage 8.4.x Maintenance Releases. |

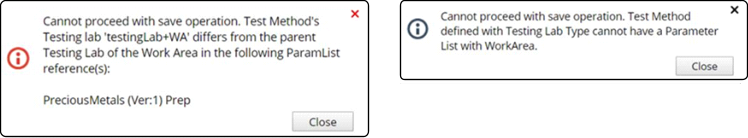

When a Parameter List is added to a Test Method, the Auto Assign Rule, Auto Assign Analyst, and Work Area defined for the Parameter List are copied to the Test Method.

If the Test Method's Testing Lab is not blank, the Parameter List's Work Area is validated to ensure it is in the Test Method's Testing Lab. A Parameter List with a Work Area is not allowed to be added to a Test Method that defines a Testing Lab Type. In both cases, the system shows an alert message and prevents saving of the Test Method with the incompatible values. Examples of these messages are shown below.

|

|

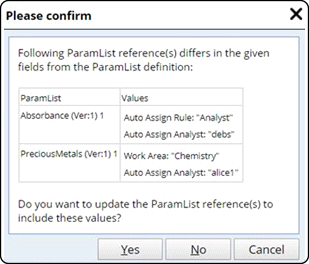

When the Test Method is saved, if the validation finds that the Auto Assign Rule, Auto Assign Analyst, or Work Area for the Test Method's Parameter List differs from the value defined in the Parameter List itself, the validator asks for confirmation to copy the values from the original Parameter List into the Test Method's Parameter List.

The confirmation dialog displays the Parameter List's AnalystId only if the Parameter List's Auto Assign Rule is Analyst and its AnalystId differs from the AnalystId defined by the Test Method's Parameter List. The confirmation dialog displays the Parameter List's Work Area only if it differs from the Work Area defined by the Test Method's Parameter List and the Testing Lab of the Parameter List is the same as the Testing Lab of the Test Method.
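
The Work Area checks described in this section can be pictured as a small validation function. This is a minimal sketch, not LabVantage code: the function name, its parameters, the `work_area_labs` mapping, and the message strings are all hypothetical, and the real alert text differs.

```python
def validate_work_area(tm_testing_lab, tm_testing_lab_type, pl_work_area, work_area_labs):
    """Return a (hypothetical) error message, or None if the combination is allowed.

    work_area_labs maps each Work Area to its parent Testing Lab.
    """
    if not pl_work_area:
        # A Parameter List without a Work Area cannot conflict with the Test Method.
        return None
    if tm_testing_lab_type:
        # A Parameter List with a Work Area is not allowed when the
        # Test Method defines a Testing Lab Type.
        return "Work Area not allowed: Test Method defines a Testing Lab Type"
    if tm_testing_lab and work_area_labs.get(pl_work_area) != tm_testing_lab:
        # The Work Area must belong to the Test Method's Testing Lab.
        return "Work Area is not in the Test Method's Testing Lab"
    return None


labs = {"Wet Chem": "QC Lab", "Micro Bench": "Micro Lab"}  # illustrative data
print(validate_work_area("QC Lab", None, "Wet Chem", labs))     # None
print(validate_work_area("QC Lab", None, "Micro Bench", labs))  # Work Area is not in the Test Method's Testing Lab
```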

Automatically Adding Parameter Lists to Test Methods |

When adding a new Parameter List, you can automatically add the Parameter List to a Test Method. For more information, see Parameter Lists → Automatically Adding Parameter Lists to Test Methods.

Force New and Repeat Count |

|

|

When adding a Test containing Parameter Lists to a Sample, the "Force New" and "Repeat Count" options offer control over the number of Data Sets that are added to the Sample and when they are added. These options can be changed only if the "Always Force New" property in the Data Entry Policy is set to No. The following examples show this behavior. In these examples, the "pH Test" Test Method contains a single "pH" Parameter List.

| Example 1: Force New = Yes, Repeat Count = n |

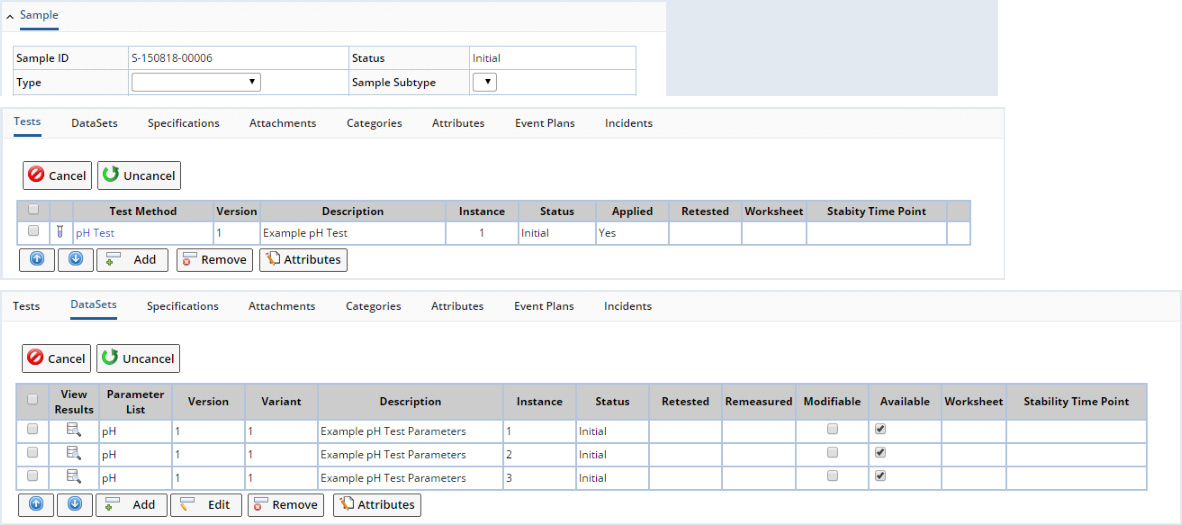

Each time you add an instance of the "pH Test" Test Method to a Sample, n "pH" Data Sets (instances of the "pH" Parameter List) are added. Suppose Repeat Count = 3 as shown below:

|

When you add the first instance of the "pH Test" Test Method to the Sample, 3 "pH" Data Sets are added:

|

In the database, one record is added in the SDIWorkitem table (for the Test), and three records are added to SDIData (one for each Data Set):

SDIWorkitem

| KeyId1 | WorkitemId | WorkitemInstance |

| S-150818-00006 | pH Test | 1 |

SDIData

| KeyId1 | ParamListId | DataSet |

| S-150818-00006 | pH | 1 |

| S-150818-00006 | pH | 2 |

| S-150818-00006 | pH | 3 |

When you add another instance of the "pH Test" Test Method to the same Sample, n additional "pH" Data Sets (instances of the "pH" Parameter List) are added to the Sample. Since Repeat Count = 3, adding a second instance of the "pH Test" Test Method to the Sample adds 3 additional "pH" Data Sets:

|

In the database, another record is added in the SDIWorkitem table (for the additional Test), and three additional records are added to SDIData (because there are now six Data Sets):

SDIWorkitem

| KeyId1 | WorkitemId | WorkitemInstance |

| S-150818-00006 | pH Test | 1 |

| S-150818-00006 | pH Test | 2 |

SDIData

| KeyId1 | ParamListId | DataSet |

| S-150818-00006 | pH | 1 |

| S-150818-00006 | pH | 2 |

| S-150818-00006 | pH | 3 |

| S-150818-00006 | pH | 4 |

| S-150818-00006 | pH | 5 |

| S-150818-00006 | pH | 6 |

| Example 2: Force New = No, Repeat Count = n |

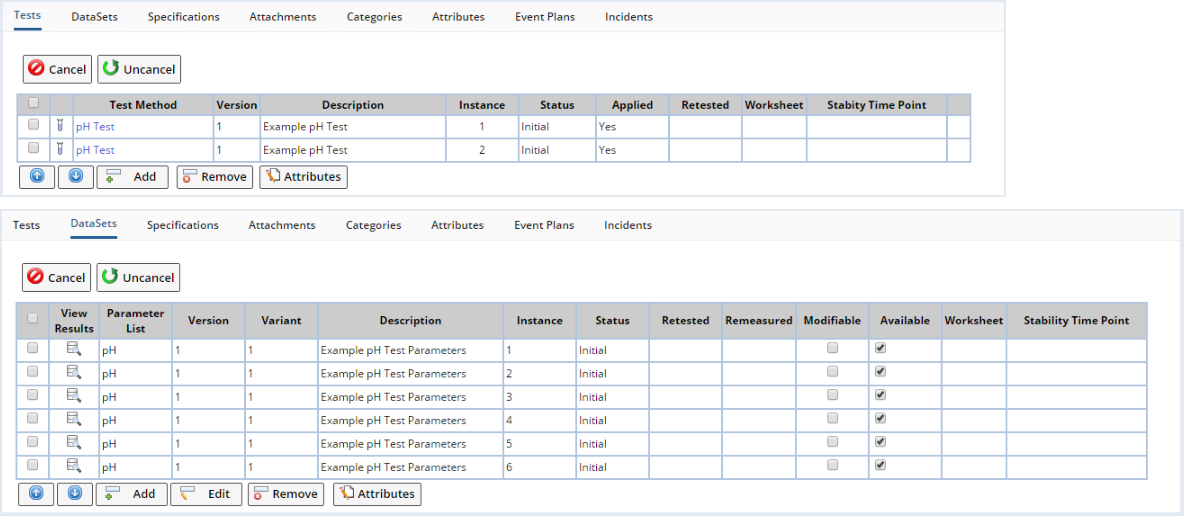

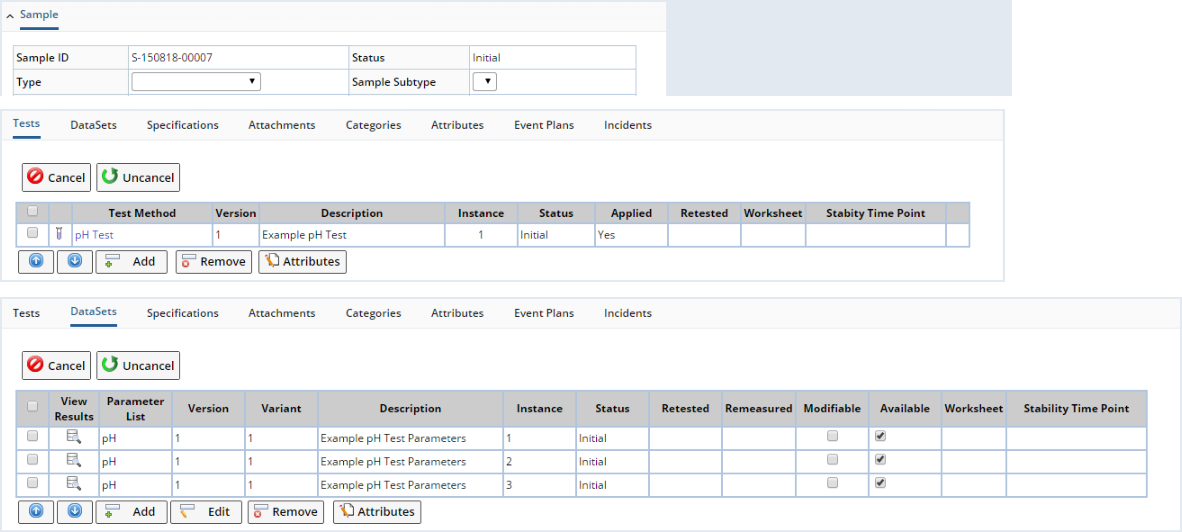

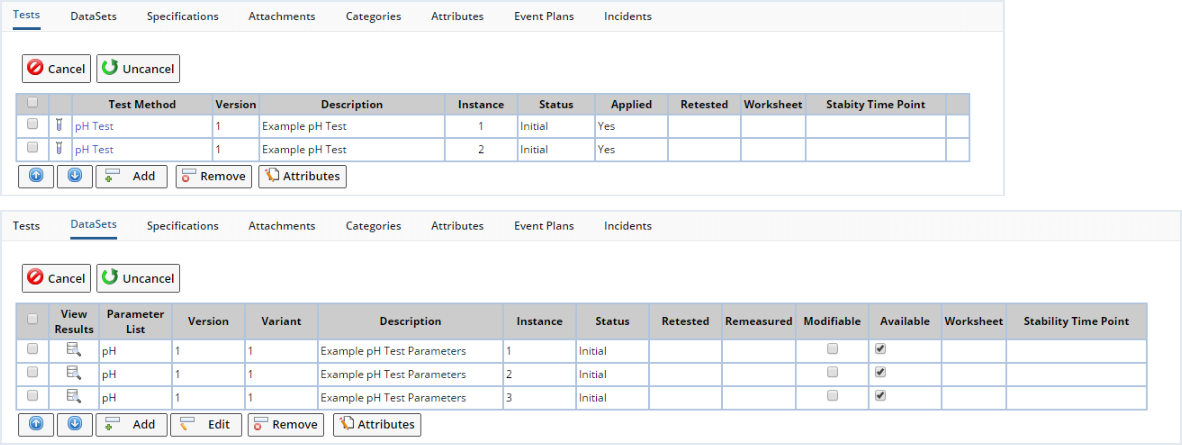

The first time you add an instance of the "pH Test" Test Method to a Sample, n "pH" Data Sets (instances of the "pH" Parameter List) are added to the Sample if no other "pH" Data Sets are already associated with the Sample. Suppose Repeat Count = 3 as shown below:

|

When you add the first instance of the "pH Test" Test Method to the Sample, 3 "pH" Data Sets are added:

|

In the database, one record is added in the SDIWorkitem table (for the Test), and three records are added to SDIData (one for each Data Set):

SDIWorkitem

| KeyId1 | WorkitemId | WorkitemInstance |

| S-150818-00007 | pH Test | 1 |

SDIData

| KeyId1 | ParamListId | DataSet |

| S-150818-00007 | pH | 1 |

| S-150818-00007 | pH | 2 |

| S-150818-00007 | pH | 3 |

However, when you add another instance of the "pH Test" Test Method to the same Sample, no additional "pH" Data Sets are added because there is at least one "pH" Data Set already associated with the Sample:

|

In the database, another record is added in the SDIWorkitem table (for the additional Test), but no additional records are added to SDIData (because no Data Sets were added):

SDIWorkitem

| KeyId1 | WorkitemId | WorkitemInstance |

| S-150818-00007 | pH Test | 1 |

| S-150818-00007 | pH Test | 2 |

SDIData

| KeyId1 | ParamListId | DataSet |

| S-150818-00007 | pH | 1 |

| S-150818-00007 | pH | 2 |

| S-150818-00007 | pH | 3 |
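
The two examples above can be summarized in a small simulation. The sketch below is illustrative only: it models the SDIWorkitem and SDIData tables as in-memory lists, and the `Sample` class and `add_test` function are hypothetical names, not part of any LabVantage API.

```python
from dataclasses import dataclass, field


@dataclass
class Sample:
    """In-memory stand-in for one Sample's rows in SDIWorkitem and SDIData."""
    key_id: str
    sdi_workitem: list = field(default_factory=list)  # (KeyId1, WorkitemId, WorkitemInstance)
    sdi_data: list = field(default_factory=list)      # (KeyId1, ParamListId, DataSet)


def add_test(sample, workitem_id, param_list_id, force_new, repeat_count):
    """Add one Test instance and, depending on Force New, its Data Sets."""
    instance = 1 + sum(1 for row in sample.sdi_workitem if row[1] == workitem_id)
    sample.sdi_workitem.append((sample.key_id, workitem_id, instance))

    existing = sum(1 for row in sample.sdi_data if row[1] == param_list_id)
    # Force New = Yes: always add repeat_count Data Sets.
    # Force New = No: add them only if the Sample has no Data Set
    # for this Parameter List yet.
    if force_new or existing == 0:
        for data_set in range(existing + 1, existing + repeat_count + 1):
            sample.sdi_data.append((sample.key_id, param_list_id, data_set))


# Example 1: Force New = Yes, Repeat Count = 3
s1 = Sample("S-150818-00006")
add_test(s1, "pH Test", "pH", force_new=True, repeat_count=3)
add_test(s1, "pH Test", "pH", force_new=True, repeat_count=3)
print(len(s1.sdi_workitem), len(s1.sdi_data))  # 2 6

# Example 2: Force New = No, Repeat Count = 3
s2 = Sample("S-150818-00007")
add_test(s2, "pH Test", "pH", force_new=False, repeat_count=3)
add_test(s2, "pH Test", "pH", force_new=False, repeat_count=3)
print(len(s2.sdi_workitem), len(s2.sdi_data))  # 2 3
```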

The WorkitemItemId column in the SDIWorkitemItem table records these relationships in decimal notation of the format x.y, where x is used to identify Parameter Lists in a Test Method, and y is used to identify Data Sets (instances of each Parameter List). For example:

If the Test Method contains one Parameter List and 3 Data Sets have been added:

| KeyId1 | WorkitemId | ItemKeyId1 | WorkitemInstance | WorkItemItemId |

| S-150818-00001 | pH Test | pH | 1 | 1.1 |

| S-150818-00001 | pH Test | pH | 1 | 1.2 |

| S-150818-00001 | pH Test | pH | 1 | 1.3 |

If the Test Method contains two Parameter Lists and 3 Data Sets have been added to each:

| KeyId1 | WorkitemId | ItemKeyId1 | WorkitemInstance | WorkItemItemId |

| S-150818-00002 | Metals Test | pH | 1 | 1.1 |

| S-150818-00002 | Metals Test | pH | 1 | 1.2 |

| S-150818-00002 | Metals Test | pH | 1 | 1.3 |

| S-150818-00002 | Metals Test | Metals | 1 | 2.1 |

| S-150818-00002 | Metals Test | Metals | 1 | 2.2 |

| S-150818-00002 | Metals Test | Metals | 1 | 2.3 |
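
The x.y numbering can be reproduced with two nested loops. This is a sketch under stated assumptions: the helper name `workitem_item_ids` is hypothetical, and it assumes x is simply the 1-based position of the Parameter List within the Test Method. The values are built as strings because "1.10" and "1.1" would collide if treated as decimal numbers.

```python
def workitem_item_ids(param_lists, data_sets_per_list):
    """Return (ParamListId, WorkitemItemId) pairs in x.y form.

    x is the 1-based position of the Parameter List in the Test Method
    (an assumption), y is the 1-based Data Set number for that Parameter List.
    """
    rows = []
    for x, param_list_id in enumerate(param_lists, start=1):
        for y in range(1, data_sets_per_list + 1):
            rows.append((param_list_id, f"{x}.{y}"))
    return rows


for row in workitem_item_ids(["pH", "Metals"], 3):
    print(row)
```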

| Retest Only |

"Retest Only" means that the Parameter List will be added to the Sample only when it is added for the first time, or when doing a Retest operation.

| Force New for Parameter List | Added to Sample when Instance 1 is created? | Added to Sample when Instance 2 is created? | Added to Sample during Retest Operation? |

| Yes | Yes | Yes | Yes |

| No | Yes | No | No |

| Retest Only | Yes | No | Yes |
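
The table above can be read as a three-way decision. The following sketch encodes it directly; the enum and function names are hypothetical, and the "Instance 1" column assumes no Data Set for the Parameter List exists on the Sample yet.

```python
from enum import Enum


class ForceNew(Enum):
    YES = "Yes"
    NO = "No"
    RETEST_ONLY = "Retest Only"


def parameter_list_is_added(force_new, instance, is_retest=False):
    """Mirror the table: is the Parameter List added to the Sample?"""
    if is_retest:
        # Retest operation: added for "Yes" and "Retest Only", not for "No".
        return force_new in (ForceNew.YES, ForceNew.RETEST_ONLY)
    if instance == 1:
        # The first instance always adds the Parameter List.
        return True
    # Later instances add it only when Force New = Yes.
    return force_new is ForceNew.YES


print(parameter_list_is_added(ForceNew.RETEST_ONLY, instance=2))                  # False
print(parameter_list_is_added(ForceNew.RETEST_ONLY, instance=2, is_retest=True))  # True
```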

| AddSDIWorkitem Override |

The "forcenew" property of the AddSDIWorkitem Action can override the Test Method configuration as follows:

| • | "Repeat" means that for each record added to the SDIWorkitem table (for additional instances of the same Test Method), the specified number of additional records are added to SDIData. |

| • | "Link" means that for each record subsequently added to the SDIWorkitem table after the first instance (for additional instances of the same Test Method), no additional records are added to SDIData. |

| AddSDIWorkitem "forcenew" | N | Y | Not Specified | R |

| Data Entry Policy "Always Force New" = Yes | Repeat | Repeat | Repeat | Repeat |

| Data Entry Policy "Always Force New" = No | ||||

| Test Method "Force New" = Yes | Repeat | Repeat | Repeat | Repeat |

| Test Method "Force New" = No | Link | Repeat | Link | Link |

| Test Method "Force New" = RetestOnly | Link | Repeat | Link | Repeat |
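
The override matrix above lends itself to a lookup table. The sketch below copies the matrix values as given; the names `OVERRIDE` and `effective_behavior` are hypothetical, and `None` is used here to stand for "Not Specified".

```python
# Rows: Test Method "Force New"; columns: AddSDIWorkitem "forcenew" value.
# None stands for "Not Specified". Values are copied from the matrix above.
OVERRIDE = {
    "Yes":        {"N": "Repeat", "Y": "Repeat", None: "Repeat", "R": "Repeat"},
    "No":         {"N": "Link",   "Y": "Repeat", None: "Link",   "R": "Link"},
    "RetestOnly": {"N": "Link",   "Y": "Repeat", None: "Link",   "R": "Repeat"},
}


def effective_behavior(always_force_new, test_method_force_new, action_forcenew=None):
    """Return "Repeat" or "Link" for a given combination of settings."""
    if always_force_new:
        # Data Entry Policy "Always Force New" = Yes wins in every column.
        return "Repeat"
    return OVERRIDE[test_method_force_new][action_forcenew]


print(effective_behavior(False, "RetestOnly", "R"))  # Repeat
print(effective_behavior(False, "No"))               # Link
```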

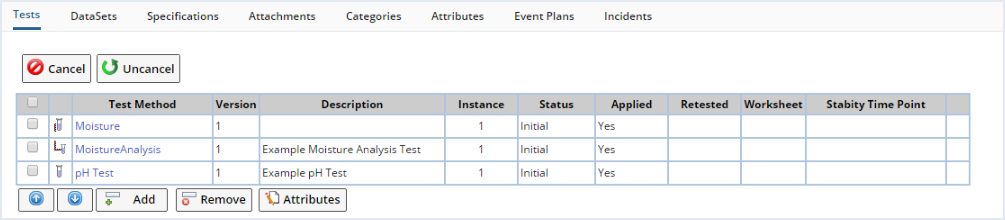

Test Method Groups |

|

|

As noted in the Overview, a "Test Method Group" can host Parameter Lists, Specifications, and other "Standard Test Methods". In the example below:

| • | The "Moisture" Test Method is a "Test Method Group" that contains the "Moisture Analysis" Test Method. |

| • | The "pH Test" Test Method is a "Standard Test Method". |

The Tests detail identifies their relation to each other:

|

| Icon | Description | |

|

Parent Test Method Group | |

|

Child Test Method (child of the parent Test Method Group) | |

|

Standalone Test Method (Test Method with no parent Test Method Group) |

When moving these items:

| Move | Select... | Result | |||||||

|

Parent Test Method Group | Entire Test Method Group moves upward (parent and its children),

depending on the row above the selected parent:

|

|||||||

| Standalone Test Method | Depends on the row above the selected standalone:

|

||||||||

| Child Test Method | Child Test Methods can move only within a Test Method Group, so the row above will always be a child. The selected child therefore moves above the child in the row above. Nothing happens if the row above is a parent. | ||||||||

|

Parent Test Method Group | Entire Test Method Group moves downward (parent and its

children), depending on the row below the selected parent:

|

|||||||

| Standalone Test Method | Depends on the row below the selected standalone:

|

||||||||

| Child Test Method | Child Test Methods can move only within a Test Method Group, so the row below will always be a child. The selected child therefore moves below the child in the row below. Nothing happens if the row below is a parent or standalone. |

The SDIWorkitem List page described in Manage Tests lists only child and standalone SDIWorkitems (Tests). Parent SDIWorkitems are not listed.

Rules |

|

|

Overview |

This section describes Rules that can be applied to determine the behavior of Test Methods.

Rules for Parameter Lists |

These Rules can be defined for Parameter Lists:

| • | "Availability Rules" determine when an existing Data Set is available for Data Entry. |

| • | "Add Rules" determine when a new Data Set is added. |

| • | "Include Rules" determine when to apply the "Availability Rules" and "Add Rules". |

The above Rules are configured in the "Parameter List" tab for both Standard Workitems and Workitem Groups.

|

|

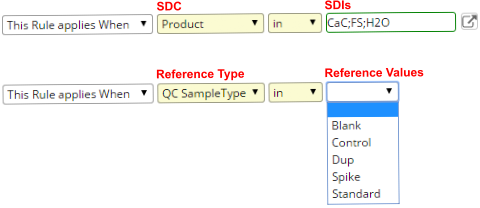

"Include Rules" determine when to apply the "Availability Rules" and "Add Rules". The default is "This Rule Applies Always". |

| This Rule Applies When |

"This Rule Applies When" applies the Availability or Add Rules only for the specified SDCs or Reference Type. The Parameter List (SDIWorkitemItem) is added to the SDI. The Availability Rules determine when it becomes available.

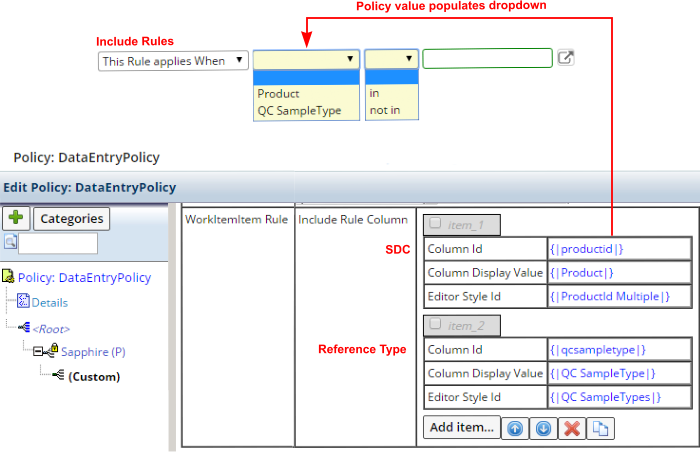

|

The SDC or Reference Type is configured using the Data Entry Policy property "WorkitemItem Rule → Include Rule Column" as shown below.

|

When "This Rule Applies When" is used with an Add Rule:

| • | The Parameter List (SDIWorkitemItem) is created only if the column value matches for the SDI (such as productid must be CaC, FS, or H2O). |

| • | If the column value does not match when the Workitem is applied to the SDI, the Parameter List Rule is considered not applicable to the SDI. The corresponding SDIWorkitemItem is therefore not added to the SDI for that Parameter List. |

When "This Rule Applies When" is used with an Availability Rule: If the column value does not match, the Default Procedural Availability Rule is used.
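
The difference between the two cases can be sketched as a single function. This is an illustration only: `evaluate_include_rule` and its return strings are hypothetical, and the allowed values reuse the productid example from the text ("NaCl" is an invented non-matching value).

```python
def evaluate_include_rule(rule_kind, sdi_column_value, allowed_values):
    """Outcome of "This Rule Applies When" for one Parameter List (sketch)."""
    if rule_kind not in ("add", "availability"):
        raise ValueError(f"unknown rule kind: {rule_kind!r}")
    if sdi_column_value in allowed_values:
        # Column value matches: the configured Add or Availability Rule applies.
        return "apply rule"
    if rule_kind == "add":
        # Add Rule, no match: the rule is not applicable, so the
        # SDIWorkitemItem is not added for this Parameter List.
        return "skip SDIWorkitemItem"
    # Availability Rule, no match: fall back to the default rule.
    return "use Default Procedural Availability Rule"


ALLOWED = {"CaC", "FS", "H2O"}
print(evaluate_include_rule("add", "H2O", ALLOWED))            # apply rule
print(evaluate_include_rule("add", "NaCl", ALLOWED))           # skip SDIWorkitemItem
print(evaluate_include_rule("availability", "NaCl", ALLOWED))  # use Default Procedural Availability Rule
```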

| This Item Applies When |

"This Item Applies When" works in a manner similar to "This Rule Applies When", with the exception that "This Item Applies When" requires that the defined conditions are met before adding the Parameter List (SDIWorkitemItem) to the SDI.

Availability Rules and Add Rules can be triggered by Parameter List Status, Parameter Limit Type Status, and Spec Condition of Data Items.

|

Default Availability Rules:

|

Rules Triggered by Parameter List Status |

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

Rules Triggered by Parameter Limit Type Status |

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

Rules Triggered by Spec Condition of Data Items |

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

|

Make Available or Add when:

|

Trigger Time Control |

|

Rules can be defined to execute after a specified time interval has passed with respect to any date field of Sample, Test (SDIWorkitem), or Data Set (SDIData). The appropriate Action is added to the ToDo List with the Due Date calculated by the Rule. |

|

Options are shown in the combinatorial example above. For Availability and Add Rules that have Status = Started, only the Started Date of the Data Set is considered. |

|

For all other Availability and Add Rules, the latest date among all Data Sets of the Parameter List is considered. |
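
As a rough illustration of the date selection described above, the sketch below picks the latest date among a Parameter List's Data Sets and adds the configured interval. The function name is hypothetical, and the assumption that the Due Date equals the reference date plus the interval is ours; the source only states that the Rule calculates the Due Date.

```python
from datetime import datetime, timedelta


def todo_due_date(data_set_dates, interval):
    """Due Date = latest of the given dates + interval (assumed arithmetic)."""
    return max(data_set_dates) + interval


# Dates taken from the same date field of each Data Set (illustrative values).
dates = [datetime(2015, 8, 18, 9, 0), datetime(2015, 8, 19, 14, 30)]
print(todo_due_date(dates, timedelta(hours=24)))  # 2015-08-20 14:30:00
```

For a Rule with Status = Started, the same function would be called with only the Started Date of the Data Set in the list.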

Rules for Test Methods |

All "Application Rules" add Child Test Methods (Child Workitems) to the SDI, but each Rule determines when the Child Test Methods are applied to the SDI. "Application Rules" are configured in the "Test Method" tab for Workitem Groups.

|

|

Adding and Applying Tests is discussed in Adding and Maintaining Tests → Adding Tests to Samples → Adding Versus Applying Tests.

When the Workitem Group is added to an SDI:

| • | "Apply Initially" (default) adds and applies the Child Test Methods (Workitems) to the SDI. |

| • | "Apply Manually" adds the Child Test Methods to the SDI but does not apply them. |

| • | "Apply When" adds the Child Test Methods to the SDI and applies them according to the Rules. |

This can be used with the Include Rule to base the selected operation on the column value of the SDI. This is configured using the Data Entry Policy in the same manner as the Include Rules. When the Workitem Group is applied to the SDI and the column value does not match, that specific Child Workitem is considered to be not applicable for the SDI. The corresponding SDIWorkitemItem is therefore not created for the Child Workitem in the Workitem Group.
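
The three Application Rules, combined with an Include Rule, can be sketched as follows. The function name and return strings are hypothetical, and the sketch assumes that under "Apply When" a Child Test Method stays added but unapplied until its Rule condition is met.

```python
def child_test_state(rule, include_matches=True, rule_condition_met=False):
    """State of one Child Workitem after the Workitem Group is added (sketch)."""
    if not include_matches:
        # Include Rule column value does not match: the Child Workitem is
        # not applicable, so no SDIWorkitemItem is created for it.
        return "not created"
    if rule == "Apply Initially":
        return "added and applied"
    if rule == "Apply Manually":
        return "added, not applied"
    if rule == "Apply When":
        return "added and applied" if rule_condition_met else "added, not applied"
    raise ValueError(f"unknown Application Rule: {rule!r}")


print(child_test_state("Apply Initially"))                      # added and applied
print(child_test_state("Apply When"))                           # added, not applied
print(child_test_state("Apply When", rule_condition_met=True))  # added and applied
print(child_test_state("Apply Manually", include_matches=False))  # not created
```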

A Trigger Time Control is also provided. It is similar in functionality to the Trigger Time Control for Availability and Add Rules, except that Data Sets (SDIData) are excluded: the time interval is with respect to any date field of Sample or Test (SDIWorkitem).

In the following descriptions of Application Rules, the Workitems referenced in each Rule are in the same (current) Workitem Group.

Apply by Workitem Status |

|

Apply when:

|

|

Apply when:

|

Apply by Spec Condition of Data Items |

|

Apply when:

|

|

Apply when:

|

|

Apply when:

|

|

Apply when:

|

Reflex Rules |

"Reflex Rules" conditionally add and apply SDIWorkitems (Tests) associated with these SDCs based on an event in a different SDIWorkitem. Reflex Rules are defined in the "Test" tab of the sdidetailmaint(workitem) Element in the Product, Sample Point, Consumable Type, and Location Maintenance pages.

You can define Reflex Rules for Standard Workitems only. You cannot specify Reflex Rules for Workitem Groups or their Child Workitems.

When an SDC with a Reflex Rule (other than the default) is added to a Sample, a "Virtual Workitem Group" is created. It is assigned the WorkitemId "_Reflex". This is an SDIWorkitem Group that is hidden throughout the user interface (Test Method List page, SDIWorkitemList page, "Test" tab on Sample detail, and the Navigator). Reflex Rules are persisted in SDIWorkitem.ReflexRule.

In general, the behavior of a Virtual Workitem Group is similar to any other Workitem Group. However, the Virtual Workitem Group always precedes all other WorkItem Groups added to the Sample from the relevant SDC (such as Product). When any SDIWorkitem of the source SDI (such as Product, SamplePoint, etc.) has a Reflex Rule applied, the CopySDIDetail Action creates a Virtual Workitem Group.

Reflex Rules do not specify Include Rules. Similar in principle to Application Rules, Reflex Rules always add the Workitems to the SDI, but the Rules determine when they are applied to the SDI.

When the SDC (Product, SamplePoint, ReagentType, or Location) is added to an SDI (such as Sample):

| • | "Add & Apply" (default) adds and applies the Workitems to the SDI according to the Reflex Rules. |

| • | "Add Without Applying" adds the Workitems to the SDI according to the Reflex Rules but does not apply them. |

Adding and Applying Tests is discussed in Adding and Maintaining Tests → Adding Tests to Samples → Adding Versus Applying Tests.

Reflex Rules Triggered by Workitem Status |

|

Add & Apply or Add Without Applying when:

|

|

Add & Apply or Add Without Applying when:

|

Reflex Rules Triggered by Spec Condition of Data Items |

|

Add & Apply or Add Without Applying when:

|

|

Add & Apply or Add Without Applying when:

|

|

Add & Apply or Add Without Applying when:

|

|

Add & Apply or Add Without Applying when:

|

| NOTE: | When multiple instances of the same Workitem are present, you are prompted to specify the instance number. |

Detail Elements |

|

|

Some changes made to detail elements may require a page refresh before taking effect.

| Detail Element | Description |

| Forms | Adds Worksheet Forms of Worksheet Type "Workitem". See eForm Worksheet Principles → Creating Worksheets → Workitem. |

| Assay Types | Associates the Test Method with an Assay Type. See BioBanking Master Data Setup → Assay Types. |

| Categories | Adds the Test Method to a Category. |

| Test Attributes | Allows both Test Attributes and Data Set Attributes to be added to the Test Method. See Attributes → Linking Data Set Attributes to Parameter Lists in Test Methods. |

| LES Text | Attributes that define Instructions and Information that are rendered by each Attribute Control in an LES Worksheet. See LES Worksheet Generation. |

| Worksheet Templates | Defines the LES Worksheet Templates that are used to create an LES Worksheet. See LES Worksheet Generation. |

| Workflows | Workflow Execution to which the Test is added. See Workflow Definition and Execution Conceptual Reference → Adding SDIs to a Workflow → Templates and Test Methods. |

| Equipment | See Associating Instruments with Test Methods. |

| Consumable Types | See Associating Consumable Types With Test Methods. |

| Resource Planning | This is used with the WAP module. See WAP Resources. |

Versioning |

|

|

"New Version" and "Approve Version" |

Test Methods are Versioned, allowing you to use the "New Version" button on the Test Method List page and the "Approve Version" button on the Test Method Maintenance page to maintain Versions. For more information, see these sections in Concepts of Versioning:

| • | SDI Versioning |

| • | Product, Test Method, and QCMethod Versioning |